HQ Camera

Outline

In several of my projects I intend to use the HQ camera for surveillance tasks with the Raspberry Pi. The picamera2 Python module has recently emerged as the replacement for the legacy PiCamera Python library. The official documentation of the picamera2 project can be found here:

https://datasheets.raspberrypi.com/camera/picamera2-manual.pdf

To get familiar with picamera2 I worked through some examples, using sample code from the documentation and adapting it where necessary. To apply what I had learned I tried to solve some image processing tasks, mainly using the Python modules numpy, opencv and scikit-image.

Examples

Setup

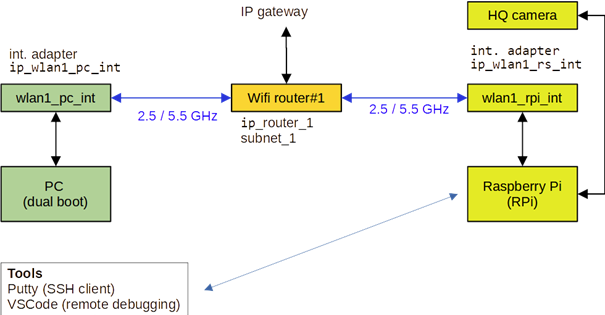

A PC is used to manage the Raspberry Pi (RPi). PC and RPi communicate via a Wi-Fi router.

This setup is just one possible example. What is important here is:

- PC and RPi communicate via a wireless connection using a router

- The HQ camera is attached to the RPi

- Logging in to the RPi must be possible via SSH

Python programs are run on the RPi to control the HQ camera (capture images). Postprocessing of images is mostly done on the PC. An SSH/SFTP client such as FileZilla is used to copy files from the RPi to the PC. Programs on the RPi are started either from VS Code on the PC (for remote debugging) or from PuTTY.

Capturing images

The Python script picamera2_example_1.py implements some features which I intend to use later in some of my projects.

The program implements this workflow:

- initialisation of the HQ camera

- some important properties of the camera are queried and printed

- capturing an image as a numpy array and saving the array to a file with the numpy.save() method

- capturing an image as a numpy array and saving the array data as a jpeg file, using OpenCV to convert the array to jpeg

- capturing an image directly into a jpeg file

- querying metadata properties of the camera and printing them

The following sub-sections provide more details on each step of the workflow.

Initialisation

The initial configuration of the HQ camera is done by the function initCamera, which is stored in the Python module picamera2Utils.py.

initCamera accepts a dictionary with configuration parameters. Currently the dictionary uses the following keys:

| key | description |
| --- | --- |
| width | image width in pixels |
| height | image height in pixels |
| wait_after_start | seconds to wait before capturing any image(s) |
| exposure_time | the exposure time in microseconds |
| analogue_gain | controls the sensitivity (see manual for details) |

Some more info about these parameters …
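The code of initCamera itself is not reproduced here. As a rough sketch only, a function with this interface could map the dictionary onto picamera2 calls as shown below. The names build_camera_settings, init_camera and example_cfg are hypothetical, and choosing a still configuration is an assumption, not necessarily what picamera2Utils.py does.

```python
import time


def build_camera_settings(cfg):
    """Translate the configuration dictionary into a frame size and a
    dictionary of libcamera control names (hypothetical helper)."""
    size = (int(cfg["width"]), int(cfg["height"]))
    controls = {
        "ExposureTime": int(cfg["exposure_time"]),    # microseconds
        "AnalogueGain": float(cfg["analogue_gain"]),
    }
    return size, controls


def init_camera(cfg):
    """Hypothetical stand-in for initCamera; needs picamera2 and a camera."""
    # Imported lazily so that build_camera_settings() also works on the PC.
    from picamera2 import Picamera2

    size, controls = build_camera_settings(cfg)
    picam2 = Picamera2()
    config = picam2.create_still_configuration(main={"size": size},
                                               controls=controls)
    picam2.configure(config)
    picam2.start()
    # Give the camera some time to settle before the first capture.
    time.sleep(cfg.get("wait_after_start", 0))
    return picam2


# Example values only; they are not taken from the original script.
example_cfg = {
    "width": 2028,
    "height": 1520,
    "wait_after_start": 2,
    "exposure_time": 10000,
    "analogue_gain": 1.0,
}
```

On the RPi the sketch would be used as picam2 = init_camera(example_cfg).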

The sample program initialises the camera. Then some important camera properties are queried and displayed like this:

import pprint

# querying some camera properties

pp = pprint.PrettyPrinter(indent=4)

print("\ncamera_controls")

pp.pprint(picam2.camera_controls)

The properties are returned in a dictionary. Each value is a tuple (minimum, maximum, default):

camera_controls =

{ 'AeConstraintMode': (0, 3, 0),

'AeEnable': (False, True, None),

'AeExposureMode': (0, 3, 0),

'AeMeteringMode': (0, 3, 0),

'AnalogueGain': (1.0, 22.2608699798584, None),

'AwbEnable': (False, True, None),

'AwbMode': (0, 7, 0),

'Brightness': (-1.0, 1.0, 0.0),

'ColourCorrectionMatrix': (-16.0, 16.0, None),

'ColourGains': (0.0, 32.0, None),

'Contrast': (0.0, 32.0, 1.0),

'ExposureTime': (60, 674181621, None),

'ExposureValue': (-8.0, 8.0, 0.0),

'FrameDurationLimits': (19989, 674193371, None),

'NoiseReductionMode': (0, 4, 0),

'Saturation': (0.0, 32.0, 1.0),

'ScalerCrop': ( libcamera.Rectangle(0, 0, 128, 128),

libcamera.Rectangle(0, 0, 4056, 2160),

None),

'Sharpness': (0.0, 16.0, 1.0)

}

Example: the analogue gain (a sensitivity setting similar to ISO) may be chosen in the range [1, 22] (exactly: up to 22.2608699798584). The exposure time may be chosen in the range from 60 microseconds to 674181621 microseconds (about 674 s). Multiplying the gain by a factor a and dividing the exposure time by the same factor a cancel each other out, so the brightness of the image remains unchanged.
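The gain/exposure trade-off can be written down in a few lines. This is only an illustrative sketch: the helper names clamp_to_limits and trade_exposure_for_gain are made up for this example, and the limits are copied from the camera_controls listing above.

```python
def clamp_to_limits(value, limits):
    """Clamp a value to the (minimum, maximum, default) tuple of a control."""
    low, high, _default = limits
    return max(low, min(high, value))


def trade_exposure_for_gain(exposure_us, gain, factor):
    """Multiply the gain by `factor` and divide the exposure time by it.

    The product exposure_us * gain, and therefore the image brightness,
    stays the same.
    """
    return exposure_us / factor, gain * factor


# Limits as reported by picam2.camera_controls in the listing above.
analogue_gain_limits = (1.0, 22.2608699798584, None)
exposure_time_limits = (60, 674181621, None)

# 20 ms at gain 1 is equivalent to 5 ms at gain 4.
new_exposure, new_gain = trade_exposure_for_gain(20000, 1.0, 4.0)
print(new_exposure, new_gain)

# Requests outside the supported range have to be clamped.
print(clamp_to_limits(30.0, analogue_gain_limits))
```

A shorter exposure reduces motion blur, while a higher gain usually increases image noise, so the trade is not free.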

Capture image as array and save to file

An uncompressed image is captured into an array (numpy):

img_numpy = picam2.capture_array("main")

The array is saved to file using method save of numpy:

np.save(numpy_file, img_numpy)

Since no image compression is used, the file is quite large. The time needed to capture the image and store it on the RPi is measured.
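To get a feeling for the numbers, the uncompressed size can be estimated and the duration measured with a small timing helper. The sketch below assumes 3 colour channels with 8 bits each; raw_image_bytes and timed are hypothetical helper names, and 4056 x 3040 is the full sensor resolution of the HQ camera.

```python
import time


def raw_image_bytes(width, height, channels=3, bytes_per_sample=1):
    """Size of the pixel data of an uncompressed image in bytes."""
    return width * height * channels * bytes_per_sample


def timed(func, *args, **kwargs):
    """Call func and return (result, elapsed time in seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, time.perf_counter() - start


full_res = raw_image_bytes(4056, 3040)
print(f"{full_res} bytes = {full_res / 2**20:.1f} MiB")

# On the RPi the helper could wrap the calls shown above, for example:
#   img_numpy, t_capture = timed(picam2.capture_array, "main")
#   _, t_save = timed(np.save, numpy_file, img_numpy)
```

The file written by np.save is slightly larger than this estimate because it also contains a small header.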

Capture image as numpy array and save to jpeg file

Again the image is first captured as an array (see above), then converted to jpeg format and saved to a file.

img_numpy = picam2.capture_array("main")

# reordering color channels because opencv assumes BGR order

# instead of RGB

cv2.imwrite(numpy_file_to_jpg, img_numpy[:,:,[2, 1, 0]])

OpenCV assumes that color images are stored in BGR order (blue-green-red). However, the array has RGB order (red-green-blue) after capturing. Therefore the color ordering must be changed when saving the array to a jpeg file. Due to image compression the file is much smaller than the uncompressed array file.
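The channel reordering can be tried out on a tiny synthetic array without a camera. The sketch below only uses numpy; the array rgb is made-up test data, and the cv2 call mentioned in the comment is an alternative that is not used in the original script.

```python
import numpy as np

# A 2 x 2 test image that is pure red in RGB order.
rgb = np.zeros((2, 2, 3), dtype=np.uint8)
rgb[..., 0] = 255

# Fancy indexing as used above: creates a reordered copy.
bgr_fancy = rgb[:, :, [2, 1, 0]]

# Reversing the last axis gives the same values, but as a view
# with a negative stride instead of a copy.
bgr_view = rgb[:, :, ::-1]

# A contiguous copy is the safe choice before handing a view to other libraries.
bgr_contiguous = np.ascontiguousarray(bgr_view)

print(bgr_fancy[0, 0])  # red now sits in the last channel

# With OpenCV installed, cv2.cvtColor(rgb, cv2.COLOR_RGB2BGR) does the same job.
```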

Capture image directly and save to jpeg file

This time the image is captured, compressed and saved to a jpeg file directly:

# capturing image directly to file as jpeg

picam2.capture_file(jpg_file)

Camera metadata

Metadata of the camera may be queried into a dictionary and displayed like this:

# getting metadata info

metadata = picam2.capture_metadata()

print("\nmetadata")

pp.pprint(metadata)

metadata

{ 'AeLocked': True,

'AnalogueGain': 1.0,

'ColourCorrectionMatrix': ( 2.006544589996338,

-0.8326654434204102,

-0.17387104034423828,

-0.26965245604515076,

1.812162160873413,

-0.542517900466919,

-0.11046533286571503,

-0.49719658493995667,

1.6076619625091553),

'ColourGains': (3.584270477294922, 1.485352873802185),

'ColourTemperature': 6499,

'DigitalGain': 1.0143702030181885,

'ExposureTime': 985,

'FocusFoM': 9204,

'FrameDuration': 19989,

'Lux': 28743.7890625,

'ScalerCrop': (108, 440, 3840, 2160),

'SensorBlackLevels': (4096, 4096, 4096, 4096),

'SensorTemperature': 22.0,

'SensorTimestamp': 341572047000}

Discussion

Which method of capturing / storing images should be favoured?

It greatly depends on how the image shall be further processed:

- If an image is to be processed numerically directly after capturing, writing it to a file first is wasteful and time-consuming. Capturing into an array should be more efficient.

- If the image needs to be transferred over a network (via HTTP, FTP, SFTP, websockets), the image data must be made available as a file, preferably a small one for fast transfer over the communication channel. In such a case capturing and saving directly to a jpeg file is the fastest method (no separate jpeg conversion with OpenCV).
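Where the transfer code can work with bytes instead of a file path, the jpeg data may also be captured into memory. The picamera2 manual shows capture_file being called with an io.BytesIO object and format="jpeg". The sketch below wraps that call; capture_jpeg_bytes is a hypothetical helper name, and this variant is not part of picamera2_example_1.py.

```python
import io


def capture_jpeg_bytes(picam2):
    """Capture one image and return it as jpeg-encoded bytes.

    `picam2` is expected to be a configured and started Picamera2 object.
    """
    buffer = io.BytesIO()
    # The format must be given explicitly because there is no file extension.
    picam2.capture_file(buffer, format="jpeg")
    return buffer.getvalue()


# On the RPi (sketch):
#   jpeg_data = capture_jpeg_bytes(picam2)
#   ... send jpeg_data over HTTP or a websocket ...
```

Whether this is faster than writing a file to the SD card first has not been measured here.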

The camera configuration uses a fixed exposure time set by the control parameter ExposureTime. That is ok if we know the lighting conditions under which the image is captured. When we have to cope with varying lighting conditions we would rather have the exposure time adjusted automatically.

The simplest approach is not to set the exposure time explicitly. It works, but under poor lighting the exposure time may become far too long for scenes with moving objects, which will then show up blurred.

Ideally I would like the camera to adjust the exposure time within a user defined range [exposure_time_min, exposure_time_max]. If poor light conditions would require an exposure time greater than exposure_time_max, the camera should automatically increase the analogue gain until the actual exposure time is within the requested range again.

Currently, however, I do not know how to implement such a feature elegantly.
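One possible workaround, sketched here under assumptions and not tested on the camera, is to do the split in software: let the automatic exposure settle, read ExposureTime and AnalogueGain from the metadata, and redistribute their product so that the exposure time stays inside the requested range. The function split_exposure and its default limits are hypothetical; the gain limit of 22.0 is taken from the camera_controls listing above.

```python
def split_exposure(auto_exposure_us, auto_gain,
                   exp_min_us, exp_max_us,
                   gain_min=1.0, gain_max=22.0):
    """Redistribute exposure_time * gain so that the exposure time
    lies within [exp_min_us, exp_max_us].

    The longest permitted exposure is preferred in order to keep the gain
    (and with it the noise) as low as possible.
    """
    total = auto_exposure_us * auto_gain
    exposure = min(max(total / gain_min, exp_min_us), exp_max_us)
    gain = min(max(total / exposure, gain_min), gain_max)
    return int(round(exposure)), gain


# Auto exposure asked for 40 ms at gain 1, but at most 10 ms are acceptable.
print(split_exposure(40000, 1.0, 100, 10000))

# On the RPi (sketch, assuming auto exposure was active for the metadata frame):
#   metadata = picam2.capture_metadata()
#   exposure, gain = split_exposure(metadata["ExposureTime"],
#                                   metadata["AnalogueGain"], 100, 10000)
#   picam2.set_controls({"ExposureTime": exposure, "AnalogueGain": gain})
```

If the gain limit is reached the image will still be too dark, and the values have to be recomputed whenever the light changes, so this is not the elegant built-in solution I was hoping for.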

Downloads: